Enterprise Workflow Orchestration with Agentic AI

Abstract

Agentic Artificial Intelligence (AI) is redefining how enterprises and government agencies approach workflow automation. Unlike task-specific bots or stand-alone agents, agentic systems are composed of autonomous, context-aware agents that collaborate to perceive, reason, and act across entire processes. These systems can adapt to changing missions, enforce compliance rules, and maintain transparency—capabilities that are critical in highly regulated environments.

This paper examines the foundations and applications of agentic workflows, exploring frameworks for multi-agent orchestration, secure access control, and built-in guardrails for compliance and auditability. Drawing on Contextual Engineering’s digital transformation expertise and practical development of agentic pipelines, we demonstrate how these systems can deliver measurable improvements in efficiency, security, and mission agility. We conclude with a call to action for agencies seeking to pilot and scale agentic AI solutions in partnership with Contextual Engineering.

Background: From Stand-Alone Agents to Agentic Systems

Government agencies operate in environments that demand efficiency, compliance, and adaptability. Traditional automation approaches, such as business process modeling and robotic process automation (RPA), have delivered incremental improvements but often lack the flexibility to adapt to evolving mission needs. Agentic AI represents the next evolution of enterprise workflow modernization, providing systems that not only automate tasks but also reason, adapt, collaborate, and make autonomous decisions across end-to-end processes.

The promise of agentic AI lies in its ability to integrate decision making, enforce compliance, and orchestrate workflows within a secure and reproducible framework—capabilities that align closely with government priorities for accountability and mission agility.

Artificial Intelligence in workflows has historically focused on stand-alone agents—software components designed to perform specific, narrow tasks. While effective in isolation, these agents often fail to adapt when workflows change, policies evolve, or data sources shift.

Agentic systems address these limitations by deploying multiple autonomous agents that collaborate and contextualize data across the enterprise. Unlike static automation, agentic systems can:

Orchestrate across multiple systems and functions

Adapt to evolving policies, regulations, and data inputs

Enforce compliance through embedded rules and guardrails

Provide end-to-end process visibility

This distinction between task automation and agentic orchestration is particularly significant for government agencies, where workflows must remain agile yet accountable to mission and regulatory requirements.

The Core Capabilities of Agentic Workflows

Agentic workflows are dynamic, distributed systems composed of autonomous agents that collaborate to achieve mission outcomes. Rather than functioning through a rigid set of architectural layers, these workflows operate through a network of agents, each of which can be configured for the role it is given. Their strength lies in a set of interdependent capabilities that determine how intelligent, secure, and adaptive the workflow can be.

Contextual Perception

Agents continuously gather and interpret data from their environment, including user inputs, API calls, and stores of documents, images, audio, and video, to form a real-time understanding of the operational context. This contextual perception ensures that every decision and action is mission-aware and grounded in current conditions.

Example: a procurement agent retrieves the latest policy updates and budget data before initiating a contract approval sequence, ensuring compliance from the outset.
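The procurement example can be sketched in a few lines of Python. This is a minimal illustration, not a reference implementation: the `policy_store` and `budget_store` dictionaries, the `Context` class, and the single funding check are all assumptions standing in for real enterprise systems and rules.

```python
from dataclasses import dataclass, field
from datetime import date


@dataclass
class Context:
    """Snapshot of what the agent knows before it acts."""
    policy_version: str
    policy_effective: date
    remaining_budget: float
    sources: list = field(default_factory=list)


def gather_context(policy_store: dict, budget_store: dict, office: str) -> Context:
    """Pull the newest policy and the current budget for one office.

    Both stores are hypothetical in-memory stand-ins for real systems.
    """
    latest = max(policy_store.values(), key=lambda p: p["effective"])
    return Context(
        policy_version=latest["version"],
        policy_effective=latest["effective"],
        remaining_budget=budget_store[office],
        sources=["policy_store", "budget_store"],
    )


def can_start_approval(ctx: Context, contract_value: float) -> bool:
    """Start the approval sequence only if current funds cover the contract."""
    return contract_value <= ctx.remaining_budget


policies = {
    "p1": {"version": "2024.1", "effective": date(2024, 1, 15)},
    "p2": {"version": "2024.2", "effective": date(2024, 6, 1)},
}
budgets = {"facilities": 250_000.0}

ctx = gather_context(policies, budgets, "facilities")
print(ctx.policy_version, can_start_approval(ctx, 180_000.0))
```

The point of the sketch is ordering: context is refreshed first, and the decision to begin the approval sequence is made only against that fresh snapshot.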

Adaptive Reasoning

Each agent employs reasoning capabilities to interpret information and make decisions. Through techniques such as chain-of-thought reasoning or graph-based orchestration (e.g., LangGraph), agents plan and coordinate actions with other agents in real time. Reasoning may occur individually or collaboratively, with specialized agents debating or verifying each other's conclusions.

Example: a compliance agent validates contract terms against FAR guidelines while a budget agent checks funding thresholds, and both adjust plans based on mutual reasoning.
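One way to picture this mutual adjustment is a review loop in which each agent may revise a shared proposal until a full round passes with no objections. The sketch below is illustrative only: the clause names, the funding ceiling, and the "cap the value at the ceiling" revision are placeholder assumptions, not actual FAR rules or the behavior of any particular framework.

```python
from dataclasses import dataclass, replace
from typing import Callable, Optional


@dataclass(frozen=True)
class Proposal:
    value: float
    clauses: frozenset


# A reviewer returns a revised proposal, or None when it has no objection.
Reviewer = Callable[[Proposal], Optional[Proposal]]

REQUIRED_CLAUSES = frozenset({"termination", "audit_rights"})  # placeholder rule set
FUNDING_CEILING = 100_000.0                                    # placeholder threshold


def compliance_agent(p: Proposal) -> Optional[Proposal]:
    """Add any required clause that is missing from the proposal."""
    missing = REQUIRED_CLAUSES - p.clauses
    return replace(p, clauses=p.clauses | missing) if missing else None


def budget_agent(p: Proposal) -> Optional[Proposal]:
    """Cap the contract value at the funding ceiling (a simplification)."""
    return replace(p, value=FUNDING_CEILING) if p.value > FUNDING_CEILING else None


def negotiate(p: Proposal, reviewers: list, max_rounds: int = 5) -> Proposal:
    """Re-run every reviewer until a full round passes with no revisions."""
    for _ in range(max_rounds):
        changed = False
        for review in reviewers:
            revised = review(p)
            if revised is not None:
                p, changed = revised, True
        if not changed:
            return p
    raise RuntimeError("agents did not converge; escalate to a human reviewer")


final = negotiate(
    Proposal(120_000.0, frozenset({"termination"})),
    [compliance_agent, budget_agent],
)
print(final.value, sorted(final.clauses))
```

The round limit matters: when agents cannot agree, the loop fails loudly so the case can be escalated rather than cycling indefinitely.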

Coordinated Action

Reasoning leads to action: agents execute tasks through tool and API calls to enterprise systems. In agentic frameworks, this coordination occurs through an orchestration graph whose nodes are agents or tools. The graph supports both directed and cyclical connections between nodes, so multiple agents can perform tasks and exchange state information with one another.

Example: once approvals are complete, agents can automatically generate documents, route them for signatures, and update records across enterprise platforms.
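To make the idea of an orchestration graph concrete, the following sketch implements one in plain Python rather than with a specific framework. The node names, the shared `state` dictionary, and the rule that the reviewer accepts the second revision are assumptions chosen to show a cycle (review back to draft) followed by a terminal action.

```python
def draft(state):
    """Produce a new revision of the document."""
    state["revision"] = state.get("revision", 0) + 1
    state["document"] = f"contract-draft-v{state['revision']}"
    return state


def review(state):
    # Placeholder rule: the reviewer accepts from the second revision on.
    state["approved"] = state["revision"] >= 2
    return state


def publish(state):
    """Terminal action: hand the approved document off for signature."""
    state["status"] = "routed_for_signature"
    return state


NODES = {"draft": draft, "review": review, "publish": publish}


def next_node(current, state):
    """Edges of the graph; review -> draft is the cycle."""
    if current == "draft":
        return "review"
    if current == "review":
        return "publish" if state["approved"] else "draft"
    return None  # publish is terminal


def run(start, state, max_steps=20):
    """Walk the graph, passing shared state from node to node."""
    node, trace = start, []
    for _ in range(max_steps):
        trace.append(node)
        state = NODES[node](state)
        node = next_node(node, state)
        if node is None:
            state["trace"] = trace
            return state
    raise RuntimeError("step limit reached; possible runaway cycle")


result = run("draft", {})
print(result["trace"])
```

Graph frameworks such as LangGraph provide richer versions of the same three ingredients: nodes, conditional edges, and a state object that travels between them. The step limit is a simple guard against cycles that never terminate.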

Continuous Learning and Improvement

Agentic systems evolve through feedback. They learn from execution outcomes, audit results, and user interactions to refine reasoning strategies, prompt formulations, or workflow routing. Learning may be automated through reinforcement learning or meta-learning, or managed through periodic human-in-the-loop oversight.

Example: after several grant cycles, an allocation agent identifies patterns that cause delays and proactively adjusts task assignments to reduce turnaround time.
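A very simple form of this feedback loop is to compute turnaround statistics from past cycles and route new work accordingly. The sketch below uses a plain averaging heuristic, not reinforcement learning or meta-learning; the reviewer names and day counts are invented sample data.

```python
from collections import defaultdict
from statistics import mean

# (assignee, days to complete) from earlier grant cycles; sample data only.
history = [
    ("reviewer_a", 4), ("reviewer_a", 6),
    ("reviewer_b", 11), ("reviewer_b", 9),
    ("reviewer_c", 7),
]


def average_turnaround(records):
    """Mean completion time per assignee."""
    by_assignee = defaultdict(list)
    for assignee, days in records:
        by_assignee[assignee].append(days)
    return {name: mean(days) for name, days in by_assignee.items()}


def pick_assignee(records):
    """Route the next task to the assignee with the lowest mean turnaround."""
    averages = average_turnaround(records)
    return min(averages, key=averages.get)


averages = average_turnaround(history)
print(averages, pick_assignee(history))
```

A production system would also weigh current workload and task type, and would likely keep a human in the loop before changing assignments.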

Framework

Before implementing agentic workflows within our enterprise ecosystem, it is important to establish a risk framework and governance strategy that supply the guardrails for system design. Because enterprise use of AI is still new, there is no single universally accepted framework. We propose aligning with the AI Risk Management Framework (AI RMF) published by NIST.

The NIST AI RMF provides a comprehensive structure for identifying, assessing, and managing AI-related risks across technical, organizational, and societal dimensions. Its purpose is to ensure that AI systems are developed and deployed in ways that are trustworthy, transparent, and aligned with enterprise values. We adapt its principles to guide our use of agentic AI, focusing on autonomous systems that act, learn, and make decisions within human-defined parameters.

The NIST AI RMF is organized around four core functions: Govern, Map, Measure, and Manage.

Govern – Establish a culture of accountability and transparency in AI decision-making. This includes defining clear ownership of agentic systems, documenting decision boundaries, and ensuring human-in-the-loop oversight for critical business functions. Governance policies will align with existing enterprise risk, compliance, and ethical standards, extending these principles to agentic systems that act with varying degrees of autonomy.

Map – Identify the context, goals, and operational boundaries of each agentic AI workflow. Before deployment, assess potential impacts—both beneficial and adverse—across business processes, employees, customers, and partners. This step ensures that the purpose and scope of the agent’s autonomy are well understood, the data sources are validated, and the potential interactions with human and digital systems are fully mapped.

Measure – Quantify and evaluate trustworthiness and performance using defined metrics. For agentic AI, measurement includes continuous monitoring of reliability, safety, fairness, interpretability, and alignment with human intent. Each system should undergo rigorous testing and simulation prior to deployment, with key performance indicators linked to ethical and operational outcomes.

Manage – Implement a continuous improvement and mitigation process for AI risks. Managing agentic AI involves active monitoring, incident response protocols, and structured feedback loops to adjust system behavior over time. Maintain a registry of known and residual risks, ensure compliance with data protection and privacy laws, and maintain pathways for human intervention and redress in cases of failure or unintended outcomes.
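The registry of known and residual risks mentioned under Manage can start as a small structured record. The sketch below is one possible shape, under stated assumptions: the 1-to-5 likelihood and impact scales and the likelihood-times-impact score are a common risk-matrix convention rather than something the AI RMF prescribes, and the sample entries are invented.

```python
from dataclasses import dataclass
from enum import Enum


class RmfFunction(Enum):
    GOVERN = "govern"
    MAP = "map"
    MEASURE = "measure"
    MANAGE = "manage"


@dataclass
class Risk:
    risk_id: str
    description: str
    function: RmfFunction   # AI RMF function under which the risk is tracked
    likelihood: int         # 1 (rare) to 5 (frequent); scale is an assumption
    impact: int             # 1 (minor) to 5 (severe); scale is an assumption
    mitigation: str = ""    # empty string means no mitigation recorded yet

    @property
    def score(self) -> int:
        return self.likelihood * self.impact


def open_risks(register, threshold=12):
    """Unmitigated risks at or above the threshold, highest score first."""
    flagged = [r for r in register if not r.mitigation and r.score >= threshold]
    return sorted(flagged, key=lambda r: r.score, reverse=True)


register = [
    Risk("R1", "Agent acts on stale policy data", RmfFunction.MAP, 3, 5),
    Risk("R2", "No owner assigned for agent decisions", RmfFunction.GOVERN, 2, 4),
    Risk("R3", "Output drift goes undetected", RmfFunction.MEASURE, 4, 4,
         mitigation="weekly drift report"),
]
flagged = open_risks(register)
print([r.risk_id for r in flagged])
```

Tagging each entry with its RMF function keeps the registry tied to the framework and makes it easy to report open risks by function.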

By embedding these principles into our enterprise architecture, we aim to create trustworthy, resilient, and accountable agentic systems that enhance human capability rather than replace it. This framework enables us to drive operational efficiency and competitive advantage while safeguarding against ethical, reputational, and regulatory risks.

Discovery & Use Cases

To determine whether agentic AI can deliver measurable value within an enterprise, it is essential to identify high-friction workflows and bottlenecks in the current or proposed process. This begins with mapping all entities involved (people, systems, and data sources) and clearly enumerating the inputs, outputs, and functions associated with each. Once these entities are understood, we can assess whether agentic AI would meaningfully improve their efficiency by asking questions aligned with the core agentic capabilities:

Do we need help synthesizing information across disparate data stores?

Do we require faster, clearer decision-making?

Are communication delays between stakeholders slowing execution?

If such challenges, or closely related ones, exist, agentic workflows may offer significant benefit. However, recognizing the need for agents is not enough on its own. Organizations must also consider the complexity and readiness required to implement and transition to an agentic system.

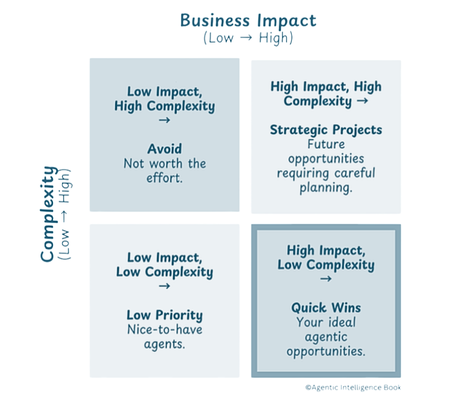

Figure 1: The Agentic Opportunities Prioritization Matrix

Credit: Agentic Artificial Intelligence: Harnessing AI Agents to Reinvent Business, Work, and Life by Pascal Bornet

To evaluate which agentic AI opportunities are worth pursuing, we must consider both the business impacts identified earlier and the complexity required to implement them. Prioritization matrices, commonly used to assess task importance, can be applied in the same way to agentic systems: they categorize potential initiatives along two dimensions, impact and complexity. The goal is to avoid high-complexity systems that deliver minimal value and instead focus on agentic workflows that present manageable complexity while offering strong business upside. This prioritization framework is useful both for transitioning from legacy systems to agentic architectures and for designing entirely new agentic systems from the ground up.
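A two-dimensional matrix of this kind is straightforward to express in code. The following is a minimal sketch: the 1-to-10 scoring scale, the midpoint of 5, the quadrant labels, and the sample opportunities are all assumptions for illustration and are not taken from the figure above.

```python
from dataclasses import dataclass


@dataclass
class Opportunity:
    name: str
    impact: int       # 1-10, higher means more business value (assumed scale)
    complexity: int   # 1-10, higher means harder to implement (assumed scale)


def quadrant(o: Opportunity, midpoint: int = 5) -> str:
    """Place an opportunity in one of four illustrative quadrants."""
    high_impact = o.impact > midpoint
    high_complexity = o.complexity > midpoint
    if high_impact and not high_complexity:
        return "pursue first"
    if high_impact and high_complexity:
        return "plan carefully"
    if not high_impact and not high_complexity:
        return "optional"
    return "avoid"


def rank(opportunities):
    """Order by net benefit (impact minus complexity), best first."""
    return sorted(opportunities, key=lambda o: o.impact - o.complexity, reverse=True)


candidates = [
    Opportunity("legacy mainframe rewrite", impact=2, complexity=9),
    Opportunity("invoice triage", impact=8, complexity=3),
    Opportunity("meeting-note tagging", impact=3, complexity=2),
    Opportunity("cross-agency case routing", impact=9, complexity=7),
]

for o in rank(candidates):
    print(f"{o.name}: {quadrant(o)}")
```

The scores themselves are the hard part and should come from the discovery exercise described earlier; the code only makes the resulting trade-off explicit and repeatable.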

Execution

After determining that agentic AI is appropriate for our enterprise system, we begin implementation by adopting a standard operating procedure that supports reproducibility. Adapting the principles of the Software Development Life Cycle (SDLC) to the Agentic AI Lifecycle [1], we structure the work around six iterative stages: Planning, Design, Development, Testing, Deployment, and Maintenance.

Stage 1: Planning

The planning stage defines the foundation for the agentic system. We must determine our goals and how we achieve them in accordance with our enterprise resources and guidelines.

Define Business Needs and Agent Objectives – Identify enterprise processes suitable for agentic automation, focusing on measurable impact areas such as decision acceleration, compliance assurance, or workflow optimization.

Resource Allocation – Determine required data, computational resources, APIs, and human oversight.

Risk and Ethics Review – Conduct an initial risk assessment aligned with the NIST AI RMF, evaluating potential bias, data sensitivity, safety, and governance concerns.

The outcome of this stage is a documented implementation plan describing agent roles, autonomy levels, and performance expectations.

Stage 2: Design

This stage translates conceptual goals into a technical architecture for the agentic system. The specifics of the agentic architecture are determined here, along with any supporting full-stack architecture needed to complete the application.

Select Models and Frameworks – Choose foundation models and libraries suited to the task, along with an orchestration framework such as LangChain, CrewAI, or custom middleware.

Grounding with Context – Identify data sources, domain knowledge, and external systems each agent will interact with.

Design Guardrails – Define the boundaries of agent autonomy, escalation triggers, and human-in-the-loop checkpoints.

Compliance by Design – Integrate security, privacy, and traceability principles into every design layer.

The deliverable is a blueprint of the agentic architecture, including communication protocols, data flow diagrams, and governance controls.
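The guardrail concepts in this stage (autonomy boundaries, escalation triggers, and human-in-the-loop checkpoints) can be captured as a small policy object that every proposed action passes through. The sketch below is illustrative: the tool names, the dollar limit, and the three-way `Decision` are assumptions, not a prescribed design.

```python
from dataclasses import dataclass
from enum import Enum


class Decision(Enum):
    ALLOW = "allow"
    ESCALATE = "escalate"   # pause and hand off to a human reviewer
    DENY = "deny"


@dataclass(frozen=True)
class Action:
    agent: str
    tool: str
    amount: float = 0.0


@dataclass(frozen=True)
class Guardrail:
    allowed_tools: frozenset
    auto_approve_limit: float   # above this amount, a human must approve

    def check(self, action: Action) -> Decision:
        """Deny tools outside the boundary; escalate amounts over the limit."""
        if action.tool not in self.allowed_tools:
            return Decision.DENY
        if action.amount > self.auto_approve_limit:
            return Decision.ESCALATE
        return Decision.ALLOW


procurement_guardrail = Guardrail(
    allowed_tools=frozenset({"create_purchase_order", "lookup_vendor"}),
    auto_approve_limit=10_000.0,
)

small_order = Action("procurement_agent", "create_purchase_order", 2_500.0)
large_order = Action("procurement_agent", "create_purchase_order", 50_000.0)
off_limits = Action("procurement_agent", "delete_vendor")

for action in (small_order, large_order, off_limits):
    print(action.tool, action.amount, procurement_guardrail.check(action).value)
```

Keeping the guardrail separate from agent logic means the boundaries can be reviewed, versioned, and audited on their own.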

Stage 3: Development

This is the system-building stage, where the theoretical workflow becomes tangible. We implement the planned agent workflow and establish connections to the systems where data will be stored.

Build Agent Logic – Implement individual agent functions, prompt engineering strategies, and goal hierarchies.

Integrate Models and Tools – Connect perception, reasoning, and action layers through APIs and shared context stores.

Fine-Tune and Configure – Adjust parameters, embeddings, or decision thresholds to align behavior with enterprise objectives.

Document Setup – Maintain clear technical documentation for reproducibility and auditability.

At the end of this stage, each agent should operate independently in a sandboxed environment with defined responsibilities and interaction boundaries.

Stage 4: Testing

Testing verifies that the system functions as intended and validates its ability to meet enterprise requirements. Prior to deploying to our user base, the architecture will be thoroughly evaluated on a smaller, but representative, scale.

Verification and Validation (V&V) – Confirm that agent behavior matches design intent (verification) and achieves desired outcomes in representative conditions (validation).

Functional and Emergent Testing – Evaluate both individual agent performance and multi-agent coordination, monitoring for drift, context loss, or unanticipated behaviors.

User Experience & Integration Testing – Conduct trials with synthetic or pilot data to evaluate system responsiveness, transparency, and usability.

Performance Benchmarking – Assess scalability, latency, and interpretability against baseline metrics.

Testing continues iteratively until accuracy, reliability, and compliance standards are consistently met across representative scenarios.
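The iterate-until-standards-are-met loop can be backed by a simple release gate that runs the agent against representative scenarios and compares the pass rate to a required threshold. In the sketch below, `classify_request` is a hypothetical keyword router standing in for the agent under test, and the scenarios and the 95% threshold are assumptions.

```python
def classify_request(text: str) -> str:
    """Stand-in for the agent under test (hypothetical keyword router)."""
    lowered = text.lower()
    if "invoice" in lowered:
        return "finance"
    if "password" in lowered:
        return "it_support"
    return "general"


# (input, expected route) pairs; a real suite would be far larger.
SCENARIOS = [
    ("Invoice 4471 is overdue", "finance"),
    ("I forgot my password", "it_support"),
    ("Where is the cafeteria?", "general"),
    ("Please reset my PASSWORD and pay this invoice", "it_support"),
]


def pass_rate(agent, scenarios) -> float:
    """Fraction of scenarios where the agent's output matches expectations."""
    passed = sum(1 for prompt, expected in scenarios if agent(prompt) == expected)
    return passed / len(scenarios)


def release_gate(agent, scenarios, required: float = 0.95) -> bool:
    """True only when the pass rate meets the required threshold."""
    return pass_rate(agent, scenarios) >= required


rate = pass_rate(classify_request, SCENARIOS)
print(f"pass rate: {rate:.2f}, release: {release_gate(classify_request, SCENARIOS)}")
```

Here the stand-in agent fails the mixed-intent scenario, so the gate stays closed. That is the intended outcome: the failure feeds the next development iteration instead of reaching users.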

Stage 5: Deployment

After successful testing, the agentic system is deployed within the enterprise environment. As we do so, we begin tracking important performance and security measures.

Launch and Observe – Deploy agents with continuous observability dashboards and telemetry.

Governance Enforcement – Validate compliance with Zero-Trust, RBAC/ABAC policies, and data handling standards (HIPAA, FedRAMP, etc.).

Auditability and Logging – Ensure all agent actions are timestamped, traceable, and recorded for future inspection.

Scalability and Modularity – Leverage reusable components and configuration-driven orchestration for rapid extension across departments.

During the transition, legacy systems remain available for redundancy until the agentic ecosystem demonstrates stable performance and trustworthiness. Change management is essential: staff must be equipped to collaborate effectively with autonomous systems, ensuring alignment between human oversight and machine agency. Deployment emphasizes controlled autonomy where agents act independently within guardrails but remain transparent and governable.
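The governance and auditability items above can be combined in a single authorization function that checks every request and records every decision. This is a minimal sketch under stated assumptions: the role names, permissions, and numeric clearance levels are placeholders, and the in-memory list stands in for an append-only audit store.

```python
import json
from datetime import datetime, timezone

# Role -> permissions (RBAC). Names are placeholders.
ROLE_PERMISSIONS = {
    "contract_agent": {"read_contract", "draft_contract"},
    "signing_agent": {"read_contract", "route_for_signature"},
}

audit_log = []  # stand-in for an append-only audit store


def authorize(agent_id, role, permission, resource_level, clearance):
    """Allow only if the role grants the permission AND clearance is sufficient.

    Unknown roles get an empty permission set, so the default is deny.
    Every decision, allowed or not, is written to the audit log.
    """
    role_ok = permission in ROLE_PERMISSIONS.get(role, set())   # RBAC check
    attr_ok = clearance >= resource_level                        # ABAC check
    allowed = role_ok and attr_ok
    audit_log.append(json.dumps({
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "agent_id": agent_id,
        "role": role,
        "permission": permission,
        "allowed": allowed,
    }))
    return allowed


granted = authorize("agent-01", "contract_agent", "draft_contract",
                    resource_level=2, clearance=3)
wrong_role = authorize("agent-01", "contract_agent", "route_for_signature",
                       resource_level=2, clearance=3)
low_clearance = authorize("agent-02", "signing_agent", "route_for_signature",
                          resource_level=3, clearance=2)
print(granted, wrong_role, low_clearance, len(audit_log))
```

Logging denials as well as approvals is deliberate: a record of what agents attempted is often as useful to auditors as a record of what they did.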

Stage 6: Maintenance

The final stage ensures the system remains adaptive, reliable, and aligned with organizational goals. We continuously improve the system based on the metrics tracked previously and how they align with our goals.

Monitor and Optimize – Continuously monitor agent performance, detect drift, and optimize prompt strategies or model weights as conditions evolve.

User Feedback Integration – Collect and act upon operator and stakeholder feedback to enhance usability and reduce friction.

Lifecycle Management – Update models, retrain agents, and refresh context sources as enterprise data and objectives evolve.

Maintenance turns deployment into a living system — continuously improving and scaling through learning loops.
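Drift detection, mentioned under Monitor and Optimize, can begin with a comparison between a baseline window and a recent window of a tracked metric. The sketch below assumes weekly accuracy scores and a tolerance of five percentage points; both the metric and the threshold are illustrative, and statistical tests would be more appropriate for noisy data.

```python
from statistics import mean


def detect_drift(baseline, recent, tolerance=0.05):
    """True when the recent mean falls more than `tolerance` below baseline."""
    return mean(baseline) - mean(recent) > tolerance


# Weekly accuracy scores; sample data only.
baseline_accuracy = [0.94, 0.95, 0.93, 0.96]
steady_accuracy = [0.94, 0.93, 0.95, 0.94]
degraded_accuracy = [0.90, 0.87, 0.85, 0.86]

print("steady window drifted:", detect_drift(baseline_accuracy, steady_accuracy))
print("degraded window drifted:", detect_drift(baseline_accuracy, degraded_accuracy))
```

A positive result would typically trigger the Lifecycle Management activities above, such as refreshing context sources or revisiting prompts, rather than an automatic change to the system.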

Conclusion and Call to Action

Agentic AI represents the next logical step in government digital transformation, one that moves beyond task-level automation to intelligent, mission-aligned orchestration. It increases productivity and automates repetitive tasks, allowing government stakeholders to focus on advancing their agency's core mission and priorities. By combining autonomous decision-making with secure access controls and compliance guardrails, agentic workflows accelerate mission outcomes while remaining accountable.

Contextual Engineering is uniquely positioned to help agencies pilot and scale agentic AI solutions that automate workflows and deliver measurable outcomes. We invite government stakeholders to partner with us in exploring practical pilot opportunities, establishing governance frameworks, and deploying secure, scalable agentic workflows tailored to mission-critical needs.

[1] Adapted from Rakesh Gohel’s 6-Stages of AI Agent Development